Computer Forensics: Big Data Forensics

What is Big Data?

In the computing world, there’s data, and then there’s big data. Described as a collection of information from traditional and digital sources of all kinds, big data is the pool of potential knowledge that companies use for ongoing discovery and analysis. As consumers, businesses, and governments look for ways to expand the role of information, unequivocally one of the most valuable assets in today’s world, they inevitably turn to the potential of big data. Data that was once archived or even ignored has become increasingly precious to industry leaders, who regard it as a valuable natural resource of the digital age.

The information contained in big data comes in two different forms:

Unstructured data is simply text-heavy information that isn’t easily organized or interpreted by traditional data models, like tweets or other social media posts.

Multi-structured data comes in a wide variety of formats and types, all of which are generated through interactions between users and their devices. Web log data is a typical example: it contains text and images along with some structured data, such as transactional information. As the digital world continues to transform communication and its channels, this type of big data will continue to grow and change.
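To make the idea of structure hiding inside a log concrete, here is a minimal sketch that pulls structured fields out of a single web server log line. It assumes the Common Log Format used by many web servers (such as Apache HTTP Server); the sample line and the `parse_log_line` name are illustrative, not taken from any particular system.

```python
import re
from typing import Optional

# Common Log Format: host ident authuser [timestamp] "request" status bytes
CLF_PATTERN = re.compile(
    r'(?P<host>\S+) (?P<ident>\S+) (?P<user>\S+) '
    r'\[(?P<time>[^\]]+)\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\d+|-)'
)

def parse_log_line(line: str) -> Optional[dict]:
    """Return the structured fields of one log line, or None if it does not match."""
    match = CLF_PATTERN.match(line)
    if match is None:
        return None
    fields = match.groupdict()
    fields["status"] = int(fields["status"])
    # A "-" size means no response body was sent; record it as 0 bytes.
    fields["size"] = 0 if fields["size"] == "-" else int(fields["size"])
    return fields

# Hypothetical sample line using a documentation IP address.
sample = '203.0.113.7 - - [10/Oct/2023:13:55:36 +0000] "GET /index.html HTTP/1.1" 200 2326'
record = parse_log_line(sample)
print(record["host"], record["status"], record["size"])
```

Lines that do not match the assumed format return `None` rather than raising, which is a deliberate choice for messy, multi-structured input where some records will always be malformed.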

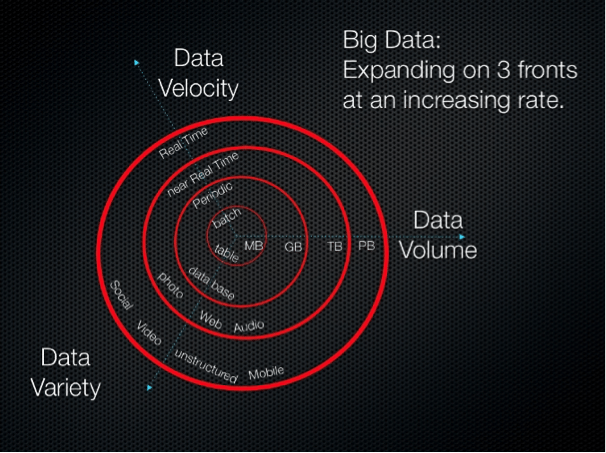

The 3Vs

There are three defining properties of big data, known as the 3Vs: volume, variety, and velocity. They are the main characteristics that shape this pool of information, and as such, they also pose the biggest challenges to managing it. Together, these three properties give big data its intimidating scope and set it apart from older data management practices.

Volume: Big data collections are so extensive and complex that they overwhelm the application software tasked with handling them. Big data is particularly difficult to store, analyze, share, and transfer, and, most importantly, to keep secure. This volume of information is composed of unstructured and multi-structured data, both of which contribute to its unwieldy mass. To visualize the size of big data, consider the one billion people active on Facebook today, many of whom store numerous images on their profiles. Those photographs alone number over 250 billion on the platform, which is just one minuscule player in the gargantuan world of big data. And as more people around the world connect through more devices, share more information, and adopt more technology built to facilitate these relationships, the volume of big data will keep swelling.

Variety: The data of today barely resembles the rows and columns of yesteryear. From sensor data to images to email messages to encrypted packets, modern data is highly unstructured and varies from application to application. It includes written text, images, and attachments in a wide variety of shapes and sizes, and it does not fit easily into the neat fields of a spreadsheet or a database.

Velocity: As technology and the pace of doing business speed up, so does the movement of big data. Today, information pours in from all directions, and the need to get a grip on it, let alone use it constructively, is more critical than ever. Regardless of the industry or platform, this data has to be ingested, processed, filed, and somehow retrieved. To complicate matters further, it also has to be secured.

Where Does Big Data Come From?

In most cases, big data on its own is meaningless, but when it is combined with relational information such as customer records, sales locations, or revenue figures, it can yield new insights, decisions, and actions. It is generated through three main sources: transactional information resulting from payments, invoices, storage records, and delivery notices; machine data gathered from equipment, such as web logs or smartphone sensors; and social data found in social media activity, such as tweets or Facebook likes.
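As a rough illustration of that combination, the sketch below enriches raw machine events, which carry only a customer ID, with a small table of customer records, and then counts purchases per region. All field names and data here are hypothetical; in practice the join would typically run in a database or a distributed processing engine rather than in plain Python.

```python
from collections import defaultdict

# Hypothetical relational data: customer records keyed by customer ID.
customers = {
    "c1": {"name": "Acme Corp", "region": "EU"},
    "c2": {"name": "Globex", "region": "US"},
}

# Hypothetical machine data: raw events that only carry a customer ID.
events = [
    {"customer_id": "c1", "action": "login"},
    {"customer_id": "c2", "action": "purchase"},
    {"customer_id": "c1", "action": "purchase"},
    {"customer_id": "c9", "action": "login"},  # no matching customer record
]

def purchases_by_region(events, customers):
    """Join events to customer records and count purchases per region."""
    counts = defaultdict(int)
    for event in events:
        customer = customers.get(event["customer_id"])
        if customer is None:
            continue  # unmatched events carry no regional context, so skip them
        if event["action"] == "purchase":
            counts[customer["region"]] += 1
    return dict(counts)

print(purchases_by_region(events, customers))
```

On its own, the event stream says only that some IDs made purchases; the regional breakdown appears only after the join with the customer records.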

What Is The Big Data Paradigm?

Just as the discovery of petroleum invited society to recognize new ways of doing business in the 19th century, the shifting paradigm of big data is now capturing the minds of individuals who can see its potential to open doors and create new possibilities. If knowledge is indeed power, then big data is the pathway to finding ultimate strength in any industry. Companies have access to more information than ever before—with a widening variety of sources, formats, and functions—all of which they must learn to maximize if they hope to compete in the modern world.

Big data has created a radical shift in how people think about research and storage, and in how industries engage with the massive amount of information now being generated. Businesses and governments can no longer view big data as just a byproduct of their efforts, but rather as their biggest asset. Any time something changes drastically (in this case, through the evolution of the 3Vs of big data), there must also be a fundamental adjustment in the way it is viewed, assessed, and used. This is the underlying premise of the big data paradigm shift.

What Are The Forensic Applications And Implications Of Big Data?

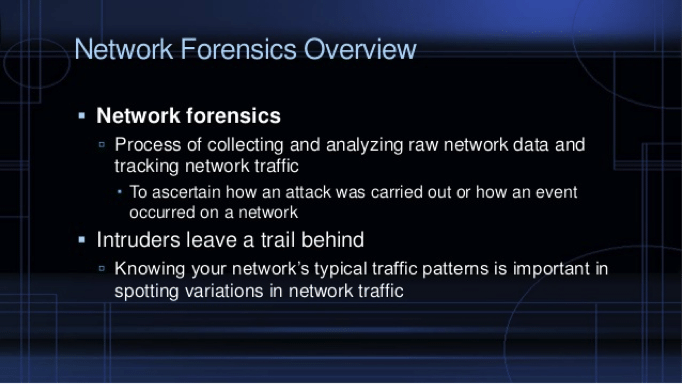

As a subset of information security and big data, network forensics involves capturing, recording, and analyzing network activity in order to locate the sources of attacks, prevent them in the future, and even prosecute those responsible. Although these forensic capabilities overlap with those of other tools, they focus primarily on full packet capture, data retention, quick access to stored data, packet header analysis, and the reading of packet contents. Anything that brings the user closer to understanding the security situation, whether through binary extraction or document searches, is part of the digital forensics process. And because the 3Vs of big data offer so much room for insight, they are a natural fit for the overarching goal of any forensic endeavor. In fact, network forensics is really just another big data application.
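Packet header analysis is the most mechanical of those tasks, so a small example may help. The sketch below decodes the fixed 20-byte part of an IPv4 header (as laid out in RFC 791) using Python's `struct` module. It is deliberately minimal: it does not parse IP options, verify the header checksum, or handle IPv6, and the sample packet is hand-built for illustration rather than captured from a real network.

```python
import socket
import struct

def parse_ipv4_header(packet: bytes) -> dict:
    """Decode the fixed 20-byte portion of an IPv4 header (RFC 791)."""
    if len(packet) < 20:
        raise ValueError("packet too short for an IPv4 header")
    # "!" = network (big-endian) byte order; the fields add up to exactly 20 bytes.
    (version_ihl, tos, total_length, identification, flags_fragment,
     ttl, protocol, checksum, src, dst) = struct.unpack("!BBHHHBBH4s4s", packet[:20])
    return {
        "version": version_ihl >> 4,
        "header_length": (version_ihl & 0x0F) * 4,  # IHL is counted in 32-bit words
        "total_length": total_length,
        "ttl": ttl,
        "protocol": protocol,  # e.g. 6 = TCP, 17 = UDP
        "src": socket.inet_ntoa(src),
        "dst": socket.inet_ntoa(dst),
    }

# Hand-built sample header: IPv4, 20-byte header, TTL 64, TCP, checksum left as 0.
sample = struct.pack(
    "!BBHHHBBH4s4s",
    0x45, 0, 40, 0x1C46, 0x4000, 64, 6, 0,
    socket.inet_aton("192.0.2.10"), socket.inet_aton("198.51.100.5"),
)
header = parse_ipv4_header(sample)
print(header["src"], "->", header["dst"], "proto", header["protocol"])
```

Full packet capture tools perform this kind of decoding at scale; the point here is only that a header is a fixed binary layout, which is why header analysis is fast compared with reading packet contents.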

Volume offers perspective. Big data is particularly helpful in information security because its vast landscape is the perfect place to identify unusual data patterns, such as suspicious IP addresses or denial-of-service (DoS) attacks, that may threaten the safety of the system. In this way, understanding big data strategies helps users avoid security breaches and establish stronger methods of prevention.
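As a simple example of spotting an unusual pattern in a large volume of records, the sketch below counts requests per source IP and flags any address whose count is far above the typical one. The rule (more than `factor` times the median count) and the `flag_heavy_sources` name are illustrative assumptions, not a standard detection method; production tools use richer baselines and more signals than raw counts.

```python
from collections import Counter
from statistics import median

def flag_heavy_sources(source_ips, factor=10.0):
    """Return source IPs whose request count exceeds `factor` times the median count.

    Using the median keeps a single flooding address from inflating the
    baseline, which a mean-based threshold would be vulnerable to.
    """
    counts = Counter(source_ips)
    if not counts:
        return []
    baseline = median(counts.values())
    return sorted(ip for ip, n in counts.items() if n > factor * baseline)

# Hypothetical traffic: a few ordinary clients and one flooding address.
traffic = (
    ["198.51.100.1"] * 12
    + ["198.51.100.2"] * 9
    + ["198.51.100.3"] * 11
    + ["203.0.113.66"] * 5000
)
print(flag_heavy_sources(traffic))
```

The larger the volume of traffic, the more stable the baseline becomes, which is one concrete sense in which volume "offers perspective".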

Velocity offers reliability. Just like a detective searching for a criminal in the real world, network forensics calls for an ample supply of information, and the more diverse and timely it is, the better. Sources also need to be valid, verifiable, and trusted for the process to be effective; with such sources in place, digital forensics can rely on the velocity of big data to process information immediately, expose patterns, and point to potential threats. The sooner a threat is identified, the sooner it can be disrupted or blocked. As a result, integrating network forensics with big data analytics allows for better automated responses to security issues, real-time analysis, and the ability to address problems with integrity.
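Velocity means acting on events as they arrive rather than after the fact. A minimal sketch of that idea keeps a sliding window of recent timestamps per source and raises an alert as soon as a source exceeds a rate limit. The class name, window length, and limit are hypothetical, and the code assumes each source's timestamps arrive in non-decreasing order.

```python
from collections import defaultdict, deque

class SlidingWindowDetector:
    """Flag a source as soon as it sends more than `limit` events within `window` seconds."""

    def __init__(self, window: float = 1.0, limit: int = 100):
        self.window = window
        self.limit = limit
        self.events = defaultdict(deque)  # source -> timestamps still inside the window

    def observe(self, source: str, timestamp: float) -> bool:
        """Record one event; return True if this source is now over the limit.

        Assumes timestamps for a given source arrive in non-decreasing order.
        """
        times = self.events[source]
        times.append(timestamp)
        # Drop timestamps that have slid out of the window.
        while times and times[0] <= timestamp - self.window:
            times.popleft()
        return len(times) > self.limit

detector = SlidingWindowDetector(window=1.0, limit=3)
alerts = []
for t in (0.0, 0.1, 0.2, 0.3):  # four events within 0.3 s from one source
    if detector.observe("203.0.113.66", t):
        alerts.append(t)
print(alerts)
```

Each call does a constant amount of amortized work, so the check keeps pace with the stream instead of waiting for a batch job, which is what allows a threat to be flagged while it is still in progress.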

Variety offers insight. There is no shortage of sinister payloads in the flow of big data, since it arrives from many places and in many different forms. When used as part of information security, network forensics requires the wide variety of data provided by all of the network traffic.
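Making use of that variety usually starts with normalization: mapping records from different sources onto one common shape so they can be correlated. The sketch below assumes two hypothetical inputs, a JSON event from an application and a space-separated firewall line of the form `<action> <src_ip> <dst_port>`, and maps both onto the same fields. Real log formats differ, so the parsing functions are placeholders for whatever each source actually emits.

```python
import json

def from_json_event(raw: str) -> dict:
    """Normalize a hypothetical JSON application event."""
    event = json.loads(raw)
    return {"source": "app", "ip": event["client_ip"], "action": event["event"]}

def from_firewall_line(raw: str) -> dict:
    """Normalize a hypothetical firewall line: '<action> <src_ip> <dst_port>'."""
    action, ip, _port = raw.split()
    return {"source": "firewall", "ip": ip, "action": action.lower()}

records = [
    from_json_event('{"client_ip": "203.0.113.66", "event": "login_failed"}'),
    from_firewall_line("DENY 203.0.113.66 22"),
    from_firewall_line("ALLOW 198.51.100.2 443"),
]

# Once normalized, records from different sources can be correlated by IP.
by_ip = {}
for record in records:
    by_ip.setdefault(record["ip"], []).append(record["action"])
print(by_ip)
```

Neither source alone shows that the same address both failed a login and was blocked at the firewall; the correlation only becomes visible after both are in a common shape.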

Understanding big data and its shifting paradigm is critical to using it effectively in any digital investigation. As an information security tool, it can also be applied to incident prevention, since it can uncover potential security issues and automatically address them through measures such as server isolation or traffic rerouting. Because incident detection and response remain an ongoing challenge for IT administrators and for the field of digital forensics as a whole, focusing on how to make big data less of a burden and more of a detective is an ideal solution.