Security Incident Response Testing To Meet Audit Requirements

Description: Practical guidance, tools, and drill tactics to keep incident response teams ready. PCI DSS audits often require validation of IR testing; drill quarterly and be ready for the next audit cycle.

Introduction

Incident response teams in enterprise environments beholden to regulatory requirements can conduct drills that will help satisfy auditors and keep their incident handlers sharp.

A quick search through PCI DSS version 2.0, the latest version at the time of writing, for the term "incident response" reveals a number of requirements and testing procedures; the following is a summary of those requirements.

In particular, requirement 12.9 states, "Implement an incident response plan. Be prepared to respond immediately to a system breach." Its subsections go deeper, including:

- 12.9.1 calls for an incident response plan that includes specific procedures.

- 12.9.2 requires testing the plan annually (I suggest quarterly; more on this below).

- 12.9.3 requires 24/7 personnel coverage to respond.

- 12.9.4 indicates the need for appropriate training.

- 12.9.5 specifies the need to monitor alerts from intrusion detection and prevention systems (IDS/IPS) and file integrity monitoring systems (FIMS).

- 12.9.6 stresses modifying and evolving your response plan based on lessons learned.
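To keep track of which drill artifacts support which sub-requirement, a simple traceability table can help. The Python sketch below is one way to express it; the requirement summaries paraphrase the list above, while the `uncovered` helper and the sample evidence entries are hypothetical, invented purely for illustration.

```python
# Requirement summaries paraphrased from PCI DSS 2.0, requirement 12.9.
REQUIREMENTS = {
    "12.9.1": "Incident response plan with specific procedures",
    "12.9.2": "Plan tested at least annually",
    "12.9.3": "24/7 response coverage",
    "12.9.4": "Appropriate training",
    "12.9.5": "Monitoring of IDS/IPS/FIMS alerts",
    "12.9.6": "Plan updated from lessons learned",
}


def uncovered(evidence: dict[str, list[str]]) -> list[str]:
    """Return requirement IDs, in order, that have no supporting evidence yet."""
    return [req for req in REQUIREMENTS if not evidence.get(req)]


# Hypothetical evidence collected from one drill's after-action report.
drill_evidence = {
    "12.9.1": ["Versioned SOP referenced in the report"],
    "12.9.2": ["Quarterly drill report"],
    "12.9.5": ["IDS alert on backdoor connection", "FIMS alert on System32"],
}

print(uncovered(drill_evidence))  # ['12.9.3', '12.9.4', '12.9.6']
```

Anything the helper returns is a gap to close before the audit, either with evidence from the next drill or from other records such as on-call rosters and training logs.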

I propose a practice that satisfies the testing procedures for the vast majority of PCI DSS 12.9, holds up under audit, and is useful for additional compliance requirements such as ISO 27001 and SAS 70.

We'll assume the following minimum requirements are in place for a successful test:

- You have a documented incident response plan, ideally a fully realized standard operating procedure (SOP) that you keep current. To really make auditors happy, keep it versioned, update it at least twice a year, and map it to NIST SP 800-61 as closely as possible; following standards strengthens your plan and procedures under scrutiny.

- You have defined incident response personnel and they are available to respond 24/7.

- You have monitoring mechanisms in place: IDS, IPS, FIMS, or all of the above. You already receive alerts from these systems as part of regular business.

- Your IR team has a moderate level of technical incident response capability. This process is easier with in-house personnel, but if you outsource your incident response function, you can (and must) require the same capability of your vendor or consultant.

First, while PCI DSS states that an annual test is sufficient, I say step up your game. The more you drill, the sharper your team's incident response chops will be. Quarterly testing also gives you an opportunity to correct any deficiencies you identify between audit cycles.
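One quick way to show an auditor that your cadence really is quarterly (and therefore comfortably meets the annual minimum) is to compute the largest gap between recorded drill dates. This Python sketch assumes you keep a list of drill dates; the 92-day and 366-day thresholds are our own approximations of "one quarter" and "one year", not values taken from PCI DSS.

```python
from datetime import date

# Assumed approximations, not PCI DSS definitions.
QUARTERLY_MAX_GAP_DAYS = 92
ANNUAL_MAX_GAP_DAYS = 366


def max_gap_days(drill_dates: list[date]) -> int:
    """Largest number of days between consecutive drills."""
    if len(drill_dates) < 2:
        raise ValueError("need at least two drill dates to measure a gap")
    ordered = sorted(drill_dates)
    return max((later - earlier).days for earlier, later in zip(ordered, ordered[1:]))


# Hypothetical drill history for one year.
drills = [date(2012, 1, 15), date(2012, 4, 12), date(2012, 7, 10), date(2012, 10, 9)]
gap = max_gap_days(drills)
print(gap, gap <= QUARTERLY_MAX_GAP_DAYS, gap <= ANNUAL_MAX_GAP_DAYS)  # 91 True True
```

This only measures gaps between past drills; the time elapsed since the most recent drill matters too, so compare the last date against today's date as well.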

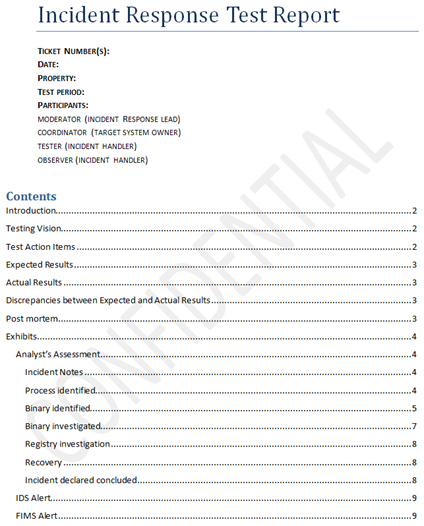

Second, be prepared to fully document and report each drill; make sure the report is concise and includes methods, timelines, participants, and findings. Telling an auditor you conduct incident response drills without supporting documentation won't get you very far.

I suggest three mandatory roles, and an optional observer, for incident response drills:

- The moderator is usually the incident response team lead.

- The coordinator is usually the target system owner.

- The testers are your incident handlers.

- The observer typically rides shotgun with the tester.

Include these roles and their individual findings or summaries in your report. See Reporting below for more guidelines.

Incident Response Testing

Now, with pre-testing guidelines established, how do you conduct a drill that helps validate your capabilities as defined by PCI DSS 12.9?

Establish your "target" system(s) in advance. Depending on the size of your team, one or two hosts should suffice. For this article we'll operate with one target system.

The target system should be "in scope" for PCI, but neither mission critical nor in active rotation. It should definitely NOT be reachable by external traffic; I recommend something like a file server or a standby host, such as a passive cluster participant or a host taken out of production rotation for patching or maintenance.

Clearly, you'll need to work with the system owner, who should be identified in your after-action report as the coordinator. Together you can decide whether to conduct a blind test or use a scheduled test window; you'll likely base this decision on coverage and capability.

Once you (the moderator) and the coordinator have selected targets and the window of operation, you have to "compromise" the target host in preparation for escalation of the test incident to your handlers. Undertake this process just prior to the test event window if scheduled in advance, or in close cooperation with the coordinator for a blind test.

NOTE: You assume all the risks associated with introducing what most anti-virus providers identify as a Trojan backdoor onto a host for incident response testing. Remember to disable and remove it at the conclusion of your test! InfoSec Resources and the author assume no responsibility.

I've used many different tactics during the "compromise" phase; it's important to mix them up when you test quarterly to keep your handlers on their toes. You can combine any number of these ideas or build your own variations, as long as they help validate your capabilities against the PCI or other requirement. Remember that you're playing with binaries that will likely trigger antivirus. You can add temporary exclusions for the files and directories you are manipulating, or you can let the alerts fire and then allow the activity via the AV client, particularly if your incident responders monitor those alerts (more evidence for your after-action report).

Beyond simply planting a binary on a system, you can do so in a way that should trigger an alert from your FIMS solution, if you use one. You can also configure your "backdoor" to set off IDS/IPS alerts, testing PCI DSS 12.9.5 in one fell swoop.

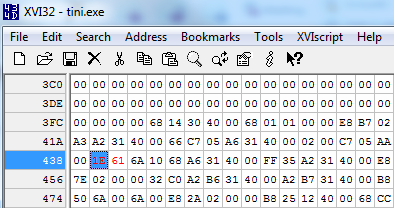

Netcat, Cryptcat, and Tini, the 3 KB backdoor from NTSecurity, can all be used in a variety of ways, but I favor Tini. Tini is a true backdoor (no other evil included) that listens on TCP port 7777 by default; you can change the port with a hex editor. To do so, open Tini in your favorite hex editor, search for 1E61 (the hex value of decimal 7777), replace it with the hex value of the port of your choosing (see Figure 1), and save the result under a new temporary file name.
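The hex value you search for is simply the port number written as two bytes, most significant byte first (7777 = 0x1E61, 22222 = 0x56CE). The Python sketch below shows that arithmetic plus a byte-pattern swap on an in-memory buffer. It assumes, as the hex-editor procedure implies, that the port appears in the binary in that big-endian order exactly once, and it refuses to patch otherwise; the function names are our own.

```python
def port_bytes(port: int) -> bytes:
    """Encode a TCP port as two big-endian bytes (7777 -> 1E61 in hex)."""
    if not 0 < port < 65536:
        raise ValueError(f"invalid TCP port: {port}")
    return port.to_bytes(2, "big")


def patch_port(data: bytes, old_port: int, new_port: int) -> bytes:
    """Swap the single occurrence of old_port's bytes for new_port's bytes."""
    old, new = port_bytes(old_port), port_bytes(new_port)
    count = data.count(old)
    if count != 1:
        # Zero or several matches: the pattern is ambiguous, so inspect by hand.
        raise ValueError(f"expected 1 occurrence of {old.hex().upper()}, found {count}")
    return data.replace(old, new)


print(port_bytes(7777).hex().upper())   # 1E61
print(port_bytes(22222).hex().upper())  # 56CE
```

To apply it, read a copy of the file as bytes (for example with `pathlib.Path.read_bytes`), patch it, and write the result under a new temporary file name, as in the manual procedure. The single-occurrence check is deliberate: a two-byte pattern can easily appear elsewhere in a binary, and replacing every match could corrupt it.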

Here's where you can combine efforts.

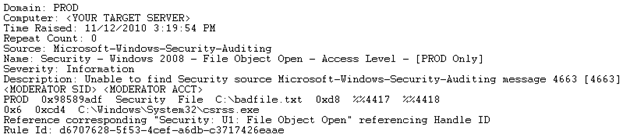

First, if you know that a test network is monitored by IDS/IPS, choose a port for Tini that will likely be flagged; for example, hex 56CE (decimal 22222) can result in an alert from one of Snort's backdoor.rules when you connect to your Tini instance (see Figure 3 below).

Second, consider saving your updated version of Tini under the name of a known good system file, for two reasons:

- A system whose integrity is monitored by a FIMS installation will likely flag the activity if, for example, you rename your new version of Tini to csrss.exe and try to copy it to C:\Windows\System32, assuming FIMS is configured to monitor csrss.exe or the System32 directory. Such an alert might look something like Figure 2. Depending on the OS, this activity may also be prevented by TrustedInstaller.

Figure 2: FIMS alert

- Mimicking the behavior of many malware samples, hide your Tini instance (now csrss.exe) on the target system somewhere it may not be blocked but where its location is not immediately obvious, to challenge your handlers. Tucking it into a directory such as C:\Windows\SysWOW64\WindowsPowerShell\v1.0 will require your handlers to conduct process, network stack, MAC times, and other analysis to find the planted backdoor.
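Commercial FIMS products do far more (real-time hooks, registry and permission monitoring, central alerting), but the core idea behind the alert in Figure 2 is a hash baseline compared against a later scan. The Python sketch below illustrates only that idea, using SHA-256 over the files under a directory; the function names are our own, and it is not a description of how any particular FIMS product works internally.

```python
import hashlib
import tempfile
from pathlib import Path


def snapshot(root: Path) -> dict[str, str]:
    """Map each file under root (as a relative path) to its SHA-256 digest."""
    digests = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            digests[str(path.relative_to(root))] = hashlib.sha256(path.read_bytes()).hexdigest()
    return digests


def compare(baseline: dict[str, str], current: dict[str, str]) -> dict[str, list[str]]:
    """List files added, removed, or modified since the baseline was taken."""
    return {
        "added": sorted(current.keys() - baseline.keys()),
        "removed": sorted(baseline.keys() - current.keys()),
        "modified": sorted(k for k in baseline.keys() & current.keys() if baseline[k] != current[k]),
    }


def demo() -> dict[str, list[str]]:
    """Simulate overwriting a monitored file and dropping a new one."""
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "csrss.exe").write_bytes(b"original system file")
        baseline = snapshot(root)
        (root / "csrss.exe").write_bytes(b"renamed backdoor")  # modified
        (root / "extra.dll").write_bytes(b"dropped file")      # added
        return compare(baseline, snapshot(root))


print(demo())  # {'added': ['extra.dll'], 'removed': [], 'modified': ['csrss.exe']}
```

Pointed at a real system directory, a scan like this is slow and will hit permission errors, which is one reason production FIMS tools track changes incrementally rather than rehashing everything.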

You can take further, optional steps to make the test more challenging by turning your renamed version of Tini into a service. Setting it to start automatically ensures that it runs even if the target system is rebooted, and a service named identically or similarly to a known good system process or service may throw your handlers off the trail.

Following the naming convention established above, create the service as follows:

sc.exe create RuntimeSubsystem binPath= "C:\Windows\SysWOW64\WindowsPowerShell\v1.0\csrss.exe -k runservice" start= auto DisplayName= "Runtime Subsystem"

Just remember, clean up after yourself when the exercise is complete:

sc.exe delete RuntimeSubsystem

should return [SC] DeleteService SUCCESS.

Then confirm:

sc.exe query RuntimeSubsystem

should return:

[SC] EnumQueryServicesStatus:OpenService FAILED 1060:

The specified service does not exist as an installed service.
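If you script your cleanup so it is repeatable across quarterly drills, a small wrapper around the same sc.exe commands can record whether the service is really gone. The Python sketch below is a hypothetical helper (`confirm_cleanup` only works on Windows); it checks the query output for error 1060, matching the expected result above. It also issues `sc.exe stop` first, an addition of ours, because Windows only marks a running service for deletion rather than removing it immediately.

```python
import subprocess

SERVICE_NAME = "RuntimeSubsystem"  # the drill service created above


def sc_command(action: str, service: str = SERVICE_NAME) -> list[str]:
    """Build an sc.exe argument list, e.g. ['sc.exe', 'query', 'RuntimeSubsystem']."""
    return ["sc.exe", action, service]


def service_removed(query_output: str) -> bool:
    """True if sc.exe query reported error 1060 (service does not exist)."""
    return "FAILED 1060" in query_output


def confirm_cleanup(service: str = SERVICE_NAME) -> bool:
    """Windows only: stop and delete the drill service, then verify it is gone."""
    for action in ("stop", "delete"):
        # A failed stop (e.g. service not running) is fine; we verify at the end.
        subprocess.run(sc_command(action, service), capture_output=True, text=True)
    result = subprocess.run(sc_command("query", service), capture_output=True, text=True)
    return service_removed(result.stdout + result.stderr)
```

A stop request can take a moment to complete, so if `confirm_cleanup` returns False, wait briefly and query again before concluding the cleanup failed. Either way, record the raw query output in your after-action report; removing the service does not delete the planted binary itself.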

Just before the test period begins, connect to the backdoor. If you want to leave a network-stack breadcrumb trail for your handlers to follow, leave the connection hanging.

Depending on your configuration, this connection should trigger the IDS/IPS alert mentioned above, as seen in Figure 3.

Image: Connection to "backdoor" leads to alert.

If you're using IDS/IPS to monitor or block egress traffic from the in-scope network, an additional outbound connection attempt from your target server to an external host on an interesting service port such as 22 should trigger an alert as well.
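Both the hanging connection to the planted listener and the outbound test on port 22 come down to a TCP connect attempt, so if you prefer to script them rather than use Netcat, a few lines of Python will do. In this sketch, `try_connect` is a hypothetical helper, and the addresses in the comments are placeholders (10.0.0.25 for the target and 203.0.113.10, a documentation-only range, for the external host); substitute your own.

```python
import socket
from typing import Optional


def try_connect(host: str, port: int, timeout: float = 5.0) -> Optional[socket.socket]:
    """Open a TCP connection; return the socket, or None if refused, blocked, or timed out."""
    try:
        return socket.create_connection((host, port), timeout=timeout)
    except OSError:  # covers ConnectionRefusedError and socket timeouts
        return None


# Placeholder addresses; replace with your own.
# 1. From a test workstation, connect to the planted listener and keep the
#    socket open so the connection stays visible in the target's network stack:
#        held = try_connect("10.0.0.25", 22222)
# 2. From the target, attempt egress on port 22 to an external host you control;
#    None suggests the attempt was blocked (or the host is unreachable):
#        egress_allowed = try_connect("203.0.113.10", 22, timeout=3) is not None
```

Keep a reference to the returned socket, and the process running, for as long as you want the breadcrumb to remain; closing the socket or exiting removes it.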

At this point, have the coordinator escalate the "compromise" per your incident response plan. In an enterprise with mature monitoring and incident response, an escalation may not even be necessary; your "malicious" activity should set the incident response wheels in motion. If you're not running a blind test, consider stating that it is a test in the escalation email or call, particularly in larger organizations with tiered support and response models.

Allow your handlers no more than a two-hour window to get to the "root cause" of the test incident.
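Capturing timestamps for the escalation and for the moment your handlers identify the root cause gives you both a pass/fail against the two-hour window and a ready-made timeline entry for the report. A minimal Python sketch, where `within_window` is a hypothetical helper and the timestamps are invented for illustration:

```python
from datetime import datetime, timedelta

RESPONSE_WINDOW = timedelta(hours=2)  # the two-hour limit suggested above


def within_window(escalated_at: datetime, root_cause_at: datetime,
                  window: timedelta = RESPONSE_WINDOW) -> tuple[timedelta, bool]:
    """Return the elapsed time and whether the root cause was found inside the window."""
    elapsed = root_cause_at - escalated_at
    if elapsed < timedelta(0):
        raise ValueError("root cause timestamp precedes escalation")
    return elapsed, elapsed <= window


# Hypothetical drill timestamps.
elapsed, ok = within_window(datetime(2012, 3, 14, 9, 5), datetime(2012, 3, 14, 10, 47))
print(elapsed, ok)  # 1:42:00 True
```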

If you're interested in some of the security incident tooling and methodology I've utilized and prescribe for others, feel free to reach out to me via email.

Once your handlers have identified your planted backdoor, it is vital that they remove it as part of the response process and document having done so. You, as the moderator, are obligated to confirm that the system has been cleaned up and returned to a pre-test state.

If your handlers fail to identify the backdoor for some reason, you are still obligated to ensure a complete cleanup and to document your process for planting the backdoor, as well as the lessons learned from how your handlers failed to identify it.
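Whoever ends up doing the cleanup, it helps to keep an explicit inventory of everything you planted and to check it off at the end. The Python sketch below is a hypothetical helper that reports which inventory paths still exist; the single path listed reuses this article's example location, and your own inventory should list every file you actually planted (services and registry entries need their own checks).

```python
from pathlib import Path

# Hypothetical inventory of files the moderator planted for this drill.
PLANTED_FILES = [
    r"C:\Windows\SysWOW64\WindowsPowerShell\v1.0\csrss.exe",
]


def leftover_artifacts(paths: list[str]) -> list[str]:
    """Return the planted paths that still exist, i.e. cleanup is incomplete."""
    return [p for p in paths if Path(p).exists()]


remaining = leftover_artifacts(PLANTED_FILES)
print("clean" if not remaining else f"still present: {remaining}")
```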

Reporting

Once your test exercise is complete, you'll want to button it up with a clean, legible, audit-worthy report. Figure 4 gives you the basic gist of what you may wish to include. Keep in mind that, per PCI DSS 12.9.6, auditors love to hear about the post-mortem meeting you held to improve the process and apply lessons learned.
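If you generate the report from structured notes, it is easier to keep every drill's report consistent from quarter to quarter. This Python sketch uses a hypothetical `DrillReport` dataclass whose fields follow the elements suggested in this article (roles, methods, timeline, findings, and lessons learned); it does not reproduce the layout in Figure 4, so adapt the fields to your own template.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class DrillReport:
    """Hypothetical after-action report; adjust fields to your own template."""
    title: str
    moderator: str
    coordinator: str
    testers: list[str]
    observer: Optional[str] = None
    methods: list[str] = field(default_factory=list)
    timeline: list[tuple[str, str]] = field(default_factory=list)  # (timestamp, event)
    findings: list[str] = field(default_factory=list)
    lessons_learned: list[str] = field(default_factory=list)

    def render(self) -> str:
        """Render the report as plain text."""
        lines = [
            self.title,
            "",
            f"Moderator: {self.moderator}",
            f"Coordinator: {self.coordinator}",
            f"Testers: {', '.join(self.testers)}",
        ]
        if self.observer:
            lines.append(f"Observer: {self.observer}")
        lines += ["", "Methods"] + [f"- {item}" for item in self.methods]
        lines += ["", "Timeline"] + [f"{ts}  {event}" for ts, event in self.timeline]
        lines += ["", "Findings"] + [f"- {item}" for item in self.findings]
        lines += ["", "Lessons learned (PCI DSS 12.9.6)"] + [f"- {item}" for item in self.lessons_learned]
        return "\n".join(lines)


# Role names are placeholders; use the real participants in your report.
report = DrillReport(
    title="Q1 incident response drill",
    moderator="IR team lead",
    coordinator="File server owner",
    testers=["Incident handler 1", "Incident handler 2"],
    findings=["Planted backdoor identified and removed"],
)
print(report.render())
```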

Again, feel free to reach out to me via email for additional reporting guidelines.

Summary

There are many good reasons to test your security incident response capability; compliance and audit requirements should be but one. As with most other activities, practice makes perfect. While perfection in incident response is unlikely, the experience acquired and lessons learned from regular testing speak for themselves. Go forth and test your incident response plan!